By now, most of us know that the audio clip at the center of the “Yanny vs Laurel” debate was voicing the word “laurel”, yet close to half of the people who listened to it heard “yanny”.

Okay, pause a moment if you do not know what I’m talking about here; you can get up to speed quickly here.

According to Wired Magazine, this “Yanny vs Laurel” debate started with a high school girl looking up the pronunciation of the word “laurel” on Vocabulary.com.

Have a listen to the actual source recording here: https://www.vocabulary.com/dictionary/laurel

Given the clarity of the source recording from Vocabulary.com, do you still have any trouble distinguishing whether it was “yanny” or “laurel”?

What turned this into a contentious global debate (with even Donald Trump weighing in on the issue) was a recording of that clip posted on reddit.

The audio recording posted on reddit was of poorer quality, likely played through a laptop speaker and re-recorded using a mobile phone.

There’s no black magic voodoo here. The discrepancy in what people heard came down primarily to how much of the bass frequencies their ears were picking up.

People who did not register much of the low frequencies from the clip would have heard the word as “yanny”.

Those who could perceive the low frequencies would have had relatively little trouble identifying the word as “laurel”.

Looking at the spectrogram below of the original recording of “laurel” taken from vocabulary.com, you can see the concentration of strong energy in the lower frequency range (indicated by the bright yellow regions).

When you filter the low frequencies away, many of the defining frequencies for the word “laurel” are removed.
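To make the effect of removing bass concrete, here is a minimal Python sketch. It is not the processing behind the reddit clip or any tool mentioned in this post. The 200 Hz and 3,000 Hz test tones, the 1,500 Hz cutoff, and the simple first-order filter are all assumptions chosen purely for illustration.

```python
import math

SAMPLE_RATE = 16000  # samples per second (assumed for this illustration)


def sine_mix(freqs, duration=0.25, sample_rate=SAMPLE_RATE):
    """Build a test signal by summing unit-amplitude sine tones."""
    n = int(sample_rate * duration)
    return [
        sum(math.sin(2 * math.pi * f * i / sample_rate) for f in freqs)
        for i in range(n)
    ]


def high_pass(samples, cutoff_hz, sample_rate=SAMPLE_RATE):
    """First-order (RC-style) high-pass filter: attenuates content below cutoff_hz."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out = [samples[0]]
    for i in range(1, len(samples)):
        out.append(alpha * (out[-1] + samples[i] - samples[i - 1]))
    return out


def tone_amplitude(samples, freq, sample_rate=SAMPLE_RATE):
    """Estimate the amplitude of one frequency component (single-bin DFT)."""
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * freq * i / sample_rate)
             for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq * i / sample_rate)
             for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n


# Hypothetical stand-in for a voice: one "bass" tone plus one "upper-mid" tone.
clip = sine_mix([200, 3000])
filtered = high_pass(clip, cutoff_hz=1500)

for label, signal in (("original", clip), ("bass filtered", filtered)):
    low = tone_amplitude(signal, 200)
    high = tone_amplitude(signal, 3000)
    print(f"{label:>13}: 200 Hz = {low:.2f}, 3000 Hz = {high:.2f}")
```

After filtering, the 200 Hz tone is reduced far more than the 3,000 Hz tone, so the balance of the signal tilts towards the upper-mid range. A real speech clip is far more complex than two tones, but the same tilt is what the slider-style demos let you hear.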

Use this neat slider tool from The New York Times to hear how the sound changes as the bass frequencies are filtered out when you push the slider to the right.

The “laurel” audio clip on reddit was lacking in the lower frequency range for a couple of reasons.

Firstly, as you might be aware, a laptop speaker cannot reproduce bass frequencies with much volume or clarity.

Secondly, a mobile device cannot record much bass either, given the small size of its microphone diaphragm.

The recording on reddit was simply a sound with its energy clustered around the mid to upper-mid frequency range, limited in both audio depth and spectrum.

The fundamental sound issue here is akin to the lack of full-spectrum intelligibility in the audio coming out of the tiny bezel speakers in modern flat-screen LED TVs.

Except that the reddit audio was already poorly recorded to begin with.

So yes, all this debate arose out of nothing more than a poor-quality recording, or what some call an “acoustically ambiguous clip”.

But there’s something more.

How do we explain why some people have this “filtering” effect in their ears while others do not?

In my years of work as an audio engineer, I have noticed that younger engineers, in spite of their training, tend to have problems discerning lower frequencies.

And I’m not talking about the ears’ ability to pick up bass guitars or kick drums in music tracks.

Even when listening to just a voice-over recording, many young studio engineers struggle to clean out the pops and thuds that occur in the low frequency range during post-production.

I used to think it could be an age-related issue. The older we get, the more we lose the hair cells in our ears that detect higher frequencies, and hence our ability to pick out bass frequencies gets relatively better.

But looking at how wide an age range the “yanny” supporters span, age might not matter as much as I had assumed.

Is it possible that some of us are just naturally more attuned to the mid and high-mid frequencies than others when it comes to processing the sound waves that enter our ears?

To understand why this may be so, let’s take a quick look at how our brains process auditory signals.

How Our Brain “Hears”

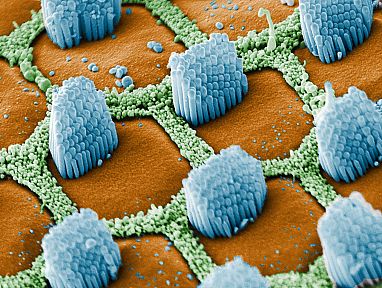

There are roughly 16,000 hair cells in the cochlea, deep inside each ear, responsible for picking up different narrow bands within the frequency range of 20 Hz to 20,000 Hz.

What’s interesting is that these hair cells are neatly lined up along the length of the cochlea according to the frequencies they detect, much like the bands of an EQ frequency analyser.

The hair cells near the base of the cochlea (the end closest to the middle ear) are most sensitive to the high frequencies, while those towards the apex (the innermost tip) are most sensitive to the lower frequencies.
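As a rough illustration of this frequency-by-position layout, the sketch below uses the Greenwood function, a commonly cited approximation of which frequency each point along the human cochlea responds to best. It is not taken from the research discussed in this post. The function name, the five sample positions, and the choice of Python are assumptions for illustration, and the constants are the usual human-fit values for that approximation.

```python
def greenwood_frequency(position):
    """Approximate best frequency (Hz) at a relative position along the cochlea.

    position: 0.0 is the low-frequency end, 1.0 is the high-frequency end.
    Constants are the commonly quoted human fit of the Greenwood function
    (an approximation, not a measurement of any individual ear).
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0.0 and 1.0")
    return 165.4 * (10 ** (2.1 * position) - 0.88)


# Sample a few evenly spaced positions, low-frequency end first.
for step in range(5):
    position = step / 4
    print(f"position {position:.2f}: about {greenwood_frequency(position):,.0f} Hz")
```

The mapping is roughly logarithmic, which is why the comparison to an EQ analyser works: equal steps along the row of hair cells correspond to roughly equal musical intervals rather than equal numbers of hertz.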

What research has shown is that the low-frequency sensitivity of these hair cells is related to the presence of a certain protein (by the name of Bmp7) during early embryonic development.

In an experiment done with chick embryos, it was found that lower levels of the Bmp7 protein resulted in hair cells attuned to the higher frequencies instead of the lower ones.

It’s possible then that some people are just naturally born with less sensitivity in their low frequency response than others.

As a result, their ears (or more accurately, the brain that is interpreting the signals from these hair cells) also develop habitual tendencies (or “neural pathways”, to be totally geeky about brain science) to zoom in more on the mid and upper-mid frequencies when processing sounds.

If you are adamant that the clip is “definitely” “yanny” (or vice versa) and can’t understand how people could hear otherwise, think back to the “blue and black vs white and gold” dress debate from a couple of years ago.

Everyone’s brain is wired differently, as a result of both genetics and the environment and culture we grew up in.

Let’s not forget also that the word “yanny” was suggested to us as one of two choices before we even heard the audio file.

To a certain degree, our ears (and brain) were set up with a bias to only choose between “laurel” and “yanny”.

Upon hearing the clip, your brain would make a split-second decision to pick a side (depending on your habitual level of low-frequency response) and fill in the rest of the “missing” sonic info from the poorly recorded audio.

There are many scientific experiments that show how prone our brains are to misdirection and suggestion, like some of the audio illusions presented in this video.

Check out the first illusion in the video above.

Did the guy say “bar” or “far” if you listen to it with your eyes closed?

This “laurel vs yanny” debacle is another example that shows we sometimes hear only what we already expected to hear.

The takeaways I had from this crazy “yanny vs laurel” global divide are:

#1 A poorly recorded audio clip can become a global hit under the guise of being “acoustically ambiguous”.

But this is probably a “one-hit wonder” that would be difficult to replicate.

So, please don’t try recording off your mobile phone for your next hit project.

#2 What your audience hears is more important than what is recorded.

What the brain expects to hear can create pre-filters that influence how your recording is going to be perceived.

Having an intuitive understanding of your audience is key to crafting and manipulating the sounds for your ads and videos so that they come across the way you intended.

Such an intuitive understanding is where the skills of recording intersect, in a tiny space, as both a creative art and a methodical science, much like the minority out there who hear both “yanny” and “laurel” at the same time in that acoustically ambiguous audio clip.

Did you? If so, join our team.